Engineering simulation across scales

Did you know?

- Engineers rely on computer simulations to model engineering systems, saving time and costs when prototyping

- Computational fluid dynamics, one of the most well-known modelling tools, is used to design Formula 1 cars, spacecraft and aircraft, among other engineering systems

- AI and machine learning-based models could save computational time and costs in modelling, but must be trained on high-quality data

Below the wings of the 67-metre-long Airbus A350, two Rolls-Royce Trent XWB turbofan engines work tirelessly. As the aircraft takes off, the turbines rotate up to 12,600 times every minute, producing between 70,000 and 100,000 horsepower. The engines draw in over 1,400 kilograms of air and burn some 100 kilograms of fuel every second.

Inside the combustion chambers of each massive engine, many thousands of chemical reactions at the atomic scale unleash energy that heats the turbulent gas mixture to about 1,700°C. As the hot gases expand, they push through and turn the turbine blades, generating thrust.

The A350, with its engines, is what engineers would call a multiscale system: the phenomena involved – including chemical reactions, turbulent flow, heat transfer, aeroacoustics, and fluid–structure interactions – take place across different scales of space, time and organisation (see ‘Scale models’).

British Airways’ Airbus A350, with its twin Rolls-Royce Trent XWB turbofan engines. Inset shows computational fluid dynamics simulations of the flow and temperature fields of the turbines, which take place at the macroscale © Wang and Luo, Energies 17, 873, 2024

Scale models

What defines the macro, meso and microscales?

Macroscale – systems, phenomena or behaviours seen at large spatial or temporal scales. Collective or bulk properties dominate, and classical laws of physics apply.

Mesoscale – scales that lie between the micro and macro scales in a multiscale system.

Microscale – systems, phenomena, or behaviours seen at extremely small spatial or temporal scales. Here, individual atoms, subatomic particles, or quantum effects dominate.

Scales differ between systems. For a weather system, the mesoscale is measured in kilometres (thunderstorms), in between the microscale (turbulence) and the macroscale (large cyclones). For an aircraft, the structure of aircraft wings or landing gear, in metres, and their response to forces, are macroscale phenomena. Grain boundaries within a metal component that determine its strength are organised at the mesoscale in micrometres. The chemistry of jet fuel combustion occurs at the microscale, in nanometres.

These scales matter because phenomena and processes at the microscale and mesoscale influence behaviour at the macroscale. For the A350, atomic-scale chemical reactions and mesoscale vortical airflow feed into the thrust that lifts the jet.

When designing anything, be it a plane, battery or a biomedical device, engineers must account for phenomena across multiple scales. They make increasing use of computer simulations to navigate their way through those different scales. Simulation methods for each scale range are an essential part of the engineering toolkit, reducing the need for costly prototypes and physical experiments (see ‘Tools that speed up design’).

The challenge is to pick the right tool for the scale you want to model, and to balance the desire for accuracy against the speed and cost of computation. In turn, that choice depends on how much data is available to create those computer models and how detailed it is.

Software is king

The modelling tools that speed up the engineering design cycle

The A350 has about a million components and subcomponents of different sizes. Each engine alone contains over 20,000 components. Every component is painstakingly designed, tested and optimised to ensure performance, reliability and safety.

If engineers used only conventional (physical) lab tests, it would take decades between beginning a design and passengers eventually flying in the aircraft. Computer-aided modelling and simulation significantly shortens the development cycle and reduces R&D costs of new aircraft.

The software tools that help engineers to design and test products include computational fluid dynamics (CFD), computer-aided design (CAD), computer-aided engineering (CAE), computer-aided manufacturing (CAM), and so on.

According to market research firm IoT Analytics, since 2022 the average manufacturer has spent more on the industrial software that engineers use to design products than on the industrial automation hardware that goes into realising their designs. By 2027, manufacturers could be spending twice as much on software as on hardware.

In recent years, engineers have shifted from modelling at individual scales in isolation to multiscale modelling, which accounts for phenomena across scales and shows how the different scales interact with one another. But with physics-based modelling, this takes an enormous amount of computational power.

This is where data-driven modelling could come in. Machine learning (a subset of artificial intelligence) can learn from large amounts of pure data, making it agnostic to the underlying physics and scales. With enough high-quality data, data-driven machine learning algorithms can be trained to simulate across scales at a fraction of the compute costs of conventional multiscale modelling.

Computational fluid dynamics simulations are widely used to design engineering systems such as Formula 1 cars © Shutterstock

Macroscale simulation: for planes, trains and automobiles

Perhaps the best-known macroscale modelling tool is computational fluid dynamics (CFD). Based on the laws of physics and born out of pioneering work in the late 1960s by Professor Brian Spalding FREng FRS and his colleagues at Imperial College London, CFD has become a mainstream tool in modern engineering. Today, all branches of engineering use CFD to analyse, predict, design, and optimise engineering systems such as aircraft, Formula 1 cars, engines, submarines, and spacecraft.

Zooming back in on the example of a plane, the flow of air over its airframe and combustion in the engines are chaotic, highly turbulent and invisible. Engineers must harness these processes to optimise engine and aircraft performance – a formidable challenge. This is where CFD comes in: it allows them to predict and visualise turbulent flow and combustion.

In reality, every system consists of billions of molecules and atoms, but CFD models their average behaviour. It does this by treating fluid flow and even combustion as a continuum. Then, it applies physical laws on a computational mesh consisting of small, discrete elements to predict flow or combustion properties and how they interact with structures.
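
The continuum-on-a-mesh idea described above can be sketched with a far simpler problem than real CFD: heat conduction along a one-dimensional bar. This is only an illustration, not how any production CFD code works. The function name `diffuse`, the mesh size, the material property `alpha` and the time step are all assumptions chosen for the sketch; only the 1,700°C figure echoes the article.

```python
# Illustrative sketch only: 1D heat conduction on a uniform mesh.
# Temperature is treated as a continuum field stored at discrete cells,
# and a physical law (Fourier conduction) is applied cell by cell.
# All names and numbers are hypothetical, chosen for this example.

def diffuse(temps, alpha, dx, dt, steps):
    """Advance cell temperatures in time; the two end cells are held fixed."""
    r = alpha * dt / dx ** 2
    if r > 0.5:
        raise ValueError("time step too large for a stable explicit update")
    t = list(temps)
    for _ in range(steps):
        new = t[:]
        for i in range(1, len(t) - 1):
            # Each interior cell exchanges heat with its two neighbours.
            new[i] = t[i] + r * (t[i - 1] - 2.0 * t[i] + t[i + 1])
        t = new
    return t


n_cells = 21
initial = [0.0] * n_cells
initial[0] = 1700.0   # hot end, in degrees Celsius
initial[-1] = 20.0    # cool end, in degrees Celsius
profile = diffuse(initial, alpha=1.0e-4, dx=0.01, dt=0.4, steps=5000)
print(round(profile[n_cells // 2], 1))  # mid-bar temperature after settling
```

Run for long enough, the cell values settle into a straight-line profile between the two fixed ends. A real CFD solver applies the same pattern to the coupled equations for mass, momentum and energy on meshes with millions of cells.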

However, modelling these processes is difficult. The processes involved can span nine or more orders of magnitude in space and time. Key features can range from nanometre-scale through to metre-scale and can unfold over femtoseconds (that’s 10⁻¹⁵ seconds) or hours. (Physicist Richard Feynman famously called turbulence “the most important unsolved problem of classical physics”.)
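
The size of these ranges is easy to check with a few lines of arithmetic. The snippet below is a minimal sketch that takes one metre, one nanometre, one femtosecond and one hour as representative endpoints. Those specific endpoints are assumptions made for the example.

```python
import math

# Assumed representative endpoints, taken from the ranges quoted in the text.
largest_length_m = 1.0        # metre-scale feature
smallest_length_m = 1.0e-9    # nanometre-scale feature
longest_time_s = 3600.0       # one hour
shortest_time_s = 1.0e-15     # one femtosecond

spatial_orders = math.log10(largest_length_m / smallest_length_m)
temporal_orders = math.log10(longest_time_s / shortest_time_s)

print(f"space: about {spatial_orders:.1f} orders of magnitude")
print(f"time:  about {temporal_orders:.1f} orders of magnitude")
```

With these endpoints, the spatial range comes to nine orders of magnitude and the temporal range to more than eighteen.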

Microscale simulation: for co-designing fuel and engine

For any aero engine, critical processes happen at the atomic and subatomic scales, which can influence engine performance and emissions.

Burning kerosene, the most common aviation fuel, sets off over 5,000 reactions at any instant. It generates over 1,000 intermediate chemical species, some of which form pollutants such as nitrogen oxides. These chemicals react inside the combustion chamber at temperatures of about 1,700°C, under about 50 times atmospheric pressure, with extremely unsteady and turbulent airflow. Conventional CFD may be powerful, but it cannot directly capture these processes, because it averages the behaviour of large numbers of molecules and atoms to obtain macro properties.

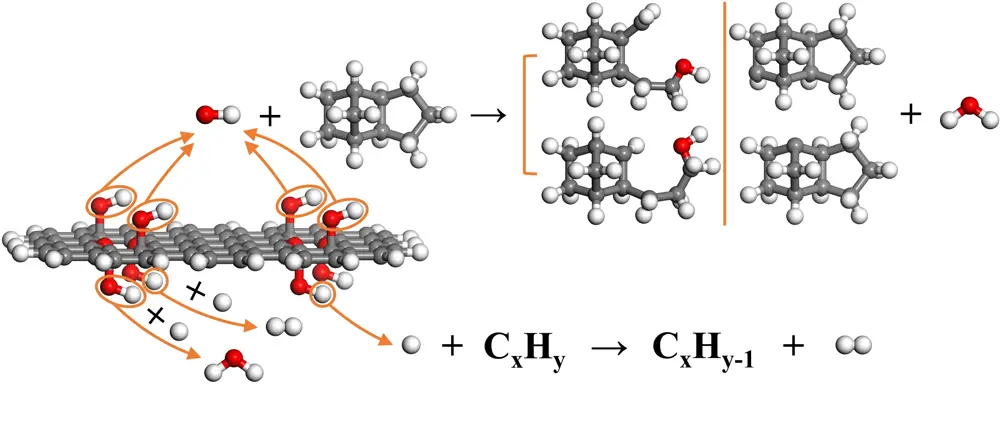

To understand this seeming chaos, engineers use tools that can model matter at the level of individual atoms and molecules. The most well-known is molecular dynamics, which uses Newton’s second law of motion to calculate the trajectories for individual atoms and molecules. A newer development, reactive molecular dynamics, can simulate chemical bond breaking and forming, and consequently chemical reactions.
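
To give a flavour of what applying Newton’s second law to individual atoms looks like, here is a minimal sketch of two atoms moving along a line. It assumes a Lennard-Jones interaction in reduced units and the velocity Verlet time-stepping scheme, both common textbook choices rather than anything specified in this article. The function names and parameter values are hypothetical, and no chemical reactions are modelled.

```python
# Illustrative sketch: two atoms on a line, Lennard-Jones interaction,
# reduced units. Names and values are assumptions for this example.

def lj_force(r, epsilon=1.0, sigma=1.0):
    """Force along the separation r (positive means repulsive)."""
    sr6 = (sigma / r) ** 6
    return 24.0 * epsilon * (2.0 * sr6 * sr6 - sr6) / r


def lj_energy(r, epsilon=1.0, sigma=1.0):
    """Potential energy of the pair at separation r."""
    sr6 = (sigma / r) ** 6
    return 4.0 * epsilon * (sr6 * sr6 - sr6)


def simulate(x1, x2, mass=1.0, dt=0.001, steps=5000):
    """Integrate both trajectories with velocity Verlet; return final state."""
    v1 = v2 = 0.0
    f = lj_force(x2 - x1)  # force on atom 2; atom 1 feels the opposite
    energies = []
    for _ in range(steps):
        # Newton's second law: acceleration = force / mass (half step).
        v1 += 0.5 * dt * (-f) / mass
        v2 += 0.5 * dt * f / mass
        x1 += dt * v1
        x2 += dt * v2
        f = lj_force(x2 - x1)
        v1 += 0.5 * dt * (-f) / mass
        v2 += 0.5 * dt * f / mass
        kinetic = 0.5 * mass * (v1 * v1 + v2 * v2)
        energies.append(kinetic + lj_energy(x2 - x1))
    return x1, x2, energies


final_x1, final_x2, energies = simulate(0.0, 1.5)
print(final_x2 - final_x1, max(energies) - min(energies))
```

The two atoms oscillate about their preferred spacing while the total energy stays close to constant, which is a standard sanity check for this kind of integrator. Production molecular dynamics codes follow the same loop for millions of atoms in three dimensions, with far more elaborate force models.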

This type of modelling could come in handy to help the world shift to zero- and low-carbon fuels by facilitating the design of carbon-neutral new fuels, collectively called e-fuels, and catalysts. However, e-fuels, as well as zero-carbon fuels such as hydrogen and ammonia, burn very differently from kerosene. If an engine designed to burn kerosene burns hydrogen or an e-fuel, combustion is likely to become unstable and risk damaging the engine.

Engineers can employ reactive molecular dynamics to design new fuels while using CFD to design new combustion engines, a process called co-design. In the context of the transition to carbon neutrality, a variety of new fuels – such as e-fuels and biofuels for aviation, and metal fuels for power generation – may be explored. It is crucial that fuels and engines are co-designed as a system, rather than treated separately as in the past. Here, atom-level, microscale simulations will play an important role in designing new fuels and virtually testing their properties.

Molecular dynamics simulation of chemical reactions of aviation fuel JP-10 with catalytic enhancement of ignition and re-ignition © Feng et al., Fuel 254, 115643, 2019

Mesoscale simulation: bridging the gap

Bridging the microscale of chemical reactions and the macroscale of a turbofan engine, there are numerous intermediate scales, called mesoscales. The way systems behave at these scales is the most complex and least well understood. Yet mesoscale processes play a key role in determining a system’s overall dynamics and performance.

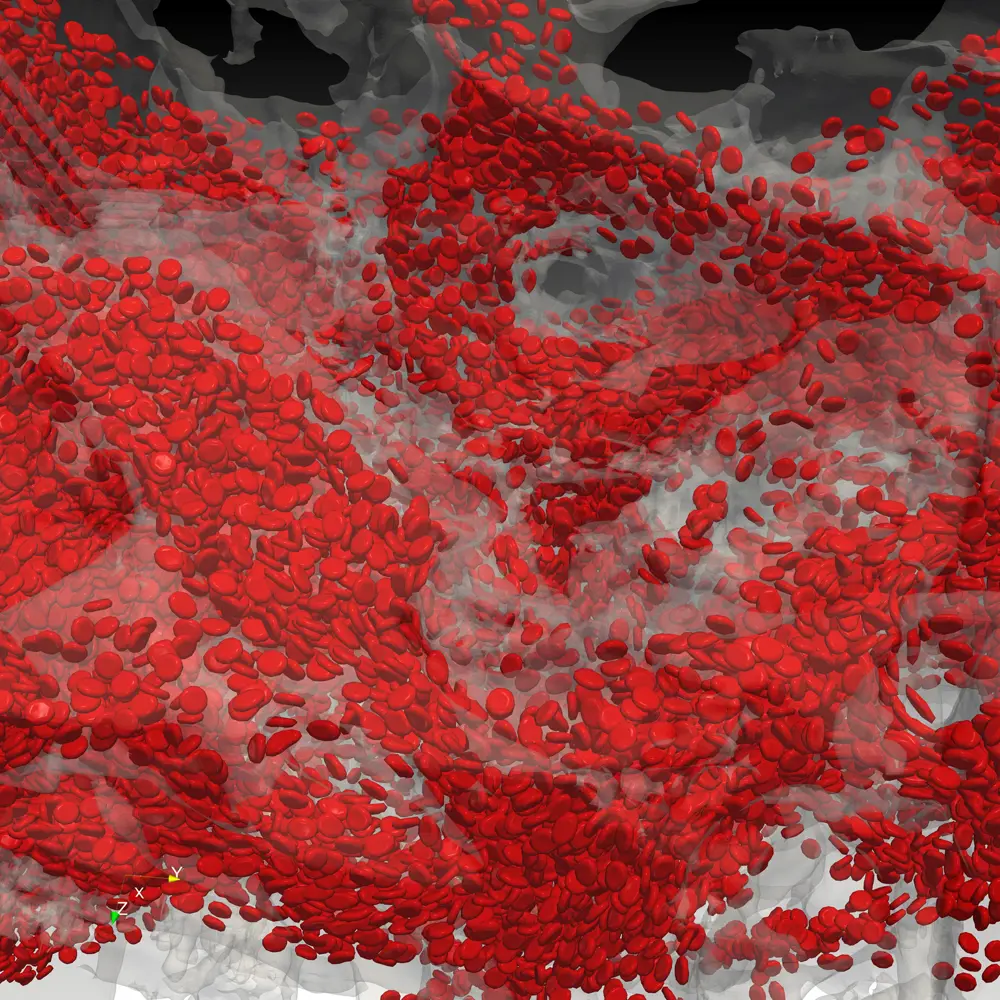

For example, in materials, mesoscale defects and grain boundaries influence macroscale properties, such as strength, elasticity, durability, and conductivity. There are also multiphase flow systems – mixtures of liquids, gases and solids – where mesoscales lie at the phase interfaces, whose thickness is measured in micrometres or nanometres. This is a challenging modelling problem relevant to many areas – for example, cosmetics manufacturing, where the phases are oil and water. Another multiphase system is blood, which contains deformable red blood cells floating in liquid plasma.

Engineers typically use the lattice Boltzmann method (LBM) to simulate fluid flows at mesoscales. It’s based on a discrete form of the Boltzmann equation, which underpins much of our understanding of the statistical behaviour of thermodynamic systems. This method has the flexibility to bridge a very wide range of scales, as it can both simulate small clusters of molecules (approaching the microscale) and approximate the collective behaviour of large numbers of molecules (towards the macroscale).
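
The core LBM loop alternates two steps: particle populations at each lattice site relax towards a local equilibrium (collision), then hop to neighbouring sites (streaming). The sketch below shows that loop in its simplest form, a one-dimensional lattice with three velocities, used here to model pure diffusion rather than fluid flow. The lattice, the single relaxation time `tau` and the function name `lbm_diffusion` are assumptions for illustration and are far simpler than research codes.

```python
# Illustrative sketch: one-dimensional, three-velocity lattice Boltzmann
# model of diffusion with a single relaxation time and periodic boundaries.
# Names and parameter values are assumptions for this example.

WEIGHTS = (2.0 / 3.0, 1.0 / 6.0, 1.0 / 6.0)  # rest, right-moving, left-moving
SHIFTS = (0, 1, -1)                           # lattice velocity of each population


def lbm_diffusion(density, tau=1.0, steps=200):
    """Evolve a density profile and return the final density at each site."""
    n = len(density)
    # Start every population at its local equilibrium value.
    f = [[w * rho for rho in density] for w in WEIGHTS]
    for _ in range(steps):
        rho = [f[0][x] + f[1][x] + f[2][x] for x in range(n)]
        # Collision: relax each population towards equilibrium.
        for i, w in enumerate(WEIGHTS):
            for x in range(n):
                f[i][x] -= (f[i][x] - w * rho[x]) / tau
        # Streaming: each population moves one site along its velocity.
        f = [[f[i][(x - SHIFTS[i]) % n] for x in range(n)] for i in range(3)]
    return [f[0][x] + f[1][x] + f[2][x] for x in range(n)]


initial = [1.0] * 51
initial[25] = 2.0  # a local spike of extra material in the middle
final = lbm_diffusion(initial)
print(sum(initial), sum(final), max(final))
```

The spike spreads out and flattens while the total amount of material is conserved. Because every site performs the same local update, the loop maps naturally onto highly parallel hardware.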

The UK Consortium on Mesoscale Engineering Sciences (UKCOMES) champions the development and use of LBM as a mesoscale simulation tool. Its work covers net-zero energy systems, advanced manufacturing, multiphase flows and healthcare, among other areas. Funded by the Engineering and Physical Sciences Research Council (EPSRC) and launched in 2013, UKCOMES takes a multidisciplinary approach with close interaction between researchers in physics, chemistry, biology, mathematics, engineering, materials science, and computer science.

Cellular-level simulation of maternal blood flow through the intervillous space of human placenta © Qi Zhou, The University of Edinburgh

LBM is an active and growing area of research. Because of the way LBM algorithms are structured, they run very well on GPUs, the powerful computer chips widely used for AI today, and on many emerging computer architectures. Thanks to this and its ability to bridge scales, LBM has found use in numerous fields. For example, researchers at University College London have used LBM to simulate the charging and discharging of lithium-ion batteries to help optimise electrode design, which can affect how long batteries last, among other properties.

The LBM approach also has significant advantages in simulating multiphase flow, as it allows phase interfaces to move, deform, break, and merge naturally. In one recent example, a team from the universities of Edinburgh and Manchester simulated blood flow, including red blood cell transport, through the intricate placental tissue, which could help physicians to diagnose placental disorders.

Bringing in data-driven modelling

The idea that machine learning can bypass explicitly written physics laws and scales, relying on pure data, represents a paradigm shift. It brings significant benefits as well as uncertainties.

Machine learning has already been used to accelerate calculations in combustion chemistry, often by two to three orders of magnitude. At the atomistic level, machine learning has led to molecular dynamics simulations of much larger systems and for longer times.

One company aiming to accelerate macroscale CFD simulations with AI is Austrian startup Emmi, which raised €15 million in seed investment in April 2025. The founding team has developed a transformer model – the same type of deep learning model as OpenAI’s large language models – that learns physical laws from data to make faster predictions about fluid flow.

While there are some successful applications in the works, the biggest problem facing data-driven modelling is a lack of quality data. Large language models have relied on huge training datasets – petabytes of text – while Google DeepMind’s GraphCast weather forecasting model, a different type of neural network, was trained on decades of meteorological data. For many engineering systems, data are proprietary, and we don’t have such rich archives of data on which to train AI models. The quality and consistency of data can also affect the accuracy of predictions.

One important source of training data for data-driven models is the outputs of individual physics-based models, as long as they are sufficiently accurate. This works well in the case of multiscale systems, which are often very difficult to measure experimentally. For example, measuring how the grain boundaries in metal move would involve cutting up the metal – which would then render it impossible to measure its properties. With high-quality modelling data, we can both better understand the physics and use the data as an input to data-driven systems – where good data is vital. Therefore, physics-based and data-driven modelling approaches are both complementary and competitive.
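
The workflow described above (sample a physics-based model, fit a cheap data-driven model to the samples, then check it on a case it has not seen) can be sketched in miniature. Here a textbook Arrhenius reaction-rate formula stands in for an expensive simulation, and a straight-line least-squares fit stands in for machine learning. Both stand-ins, along with the rate constants and the choice of inputs to the fit, are assumptions made for this example. Real engineering data are rarely this tidy.

```python
import math

R_GAS = 8.314  # universal gas constant, J/(mol K)


def physics_model(temperature_k, a=1.0e10, ea=1.5e5):
    """Stand-in for a costly physics-based simulation (Arrhenius rate)."""
    return a * math.exp(-ea / (R_GAS * temperature_k))


def fit_line(xs, ys):
    """Ordinary least-squares fit of y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


# "Training data": outputs of the physics model at eleven temperatures.
train_t = [1000.0 + 100.0 * i for i in range(11)]  # 1,000 K to 2,000 K
slope, intercept = fit_line(
    [1.0 / t for t in train_t],
    [math.log(physics_model(t)) for t in train_t],
)


def surrogate(temperature_k):
    """Cheap data-driven replacement for physics_model."""
    return math.exp(intercept + slope / temperature_k)


# Validate on a temperature that was not in the training set.
held_out = 1750.0
rel_error = abs(surrogate(held_out) - physics_model(held_out)) / physics_model(held_out)
print(rel_error)
```

The held-out check matters more than the fit itself: a surrogate is only as trustworthy as its agreement with the physics-based model on cases outside its training data.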

But while it has its advantages, data-driven modelling does not depend on a deep knowledge of underlying physics, so it becomes more challenging to interpret the results. Finally, the cost of training models is still too high, although the emergence of efficient large models such as DeepSeek may offer hope for cost savings.

Despite these challenges, data-driven modelling and machine learning open up many possibilities. Because data-driven modelling does not depend on the scales of the physical problems under consideration, it can provide engineering solutions and predictions at all scales, from the atomic through meso to macro. Moreover, the exceptional computational efficiency of machine learning, especially on GPUs, should eventually enable real-time simulations of engineering systems.

Combined with advanced measurement and sensors, the growth in computer power could enable the construction of ‘digital twins’, computer models of systems that can exchange data with their physical engineering embodiment. This would enable real-time investigation, intervention, control, and optimisation. Several CAE software companies, including Siemens and Ansys, have begun to do just this using NVIDIA’s Blackwell GPUs, specially developed for large language models and generative AI. These will allow engineers using the companies’ software to build real-time digital twins.

Most importantly, as with other techniques in the AI family, with larger training datasets, simulation based on machine learning could become increasingly accurate, reliable, intelligent, and autonomous. Data-driven modelling and simulation has great potential. We just need the data.

Contributors

Professor Kai Luo FREng, Chair of Energy Systems at UCL, and Founder and Principal Investigator for the UKCOMES since 2013, has pioneered modelling and simulation across scales for fundamental and applied research. He won the 2021 AIAA Energy Systems Award “for groundbreaking contributions to multiscale multiphysics modelling and simulation” and the 2025 ASME George Westinghouse Gold Medal “for groundbreaking contributions to digital and AI-enhanced analysis, control, prediction, design, and optimisation of energy and power systems”.
